Why Grid Frequency Matters

How grid operators maintain balance, and where renewable energy fits in.

As you may have heard, Spain and Portugal experienced a massive blackout on April 28. It was among the largest power outages in recent European history, affecting nearly 60 million people across the Iberian Peninsula.

The exact cause is still unclear as of April 30, and national authorities and transmission system operators will need weeks to complete a detailed forensic analysis. However, the trigger for the nationwide shutdown seems to be clear:

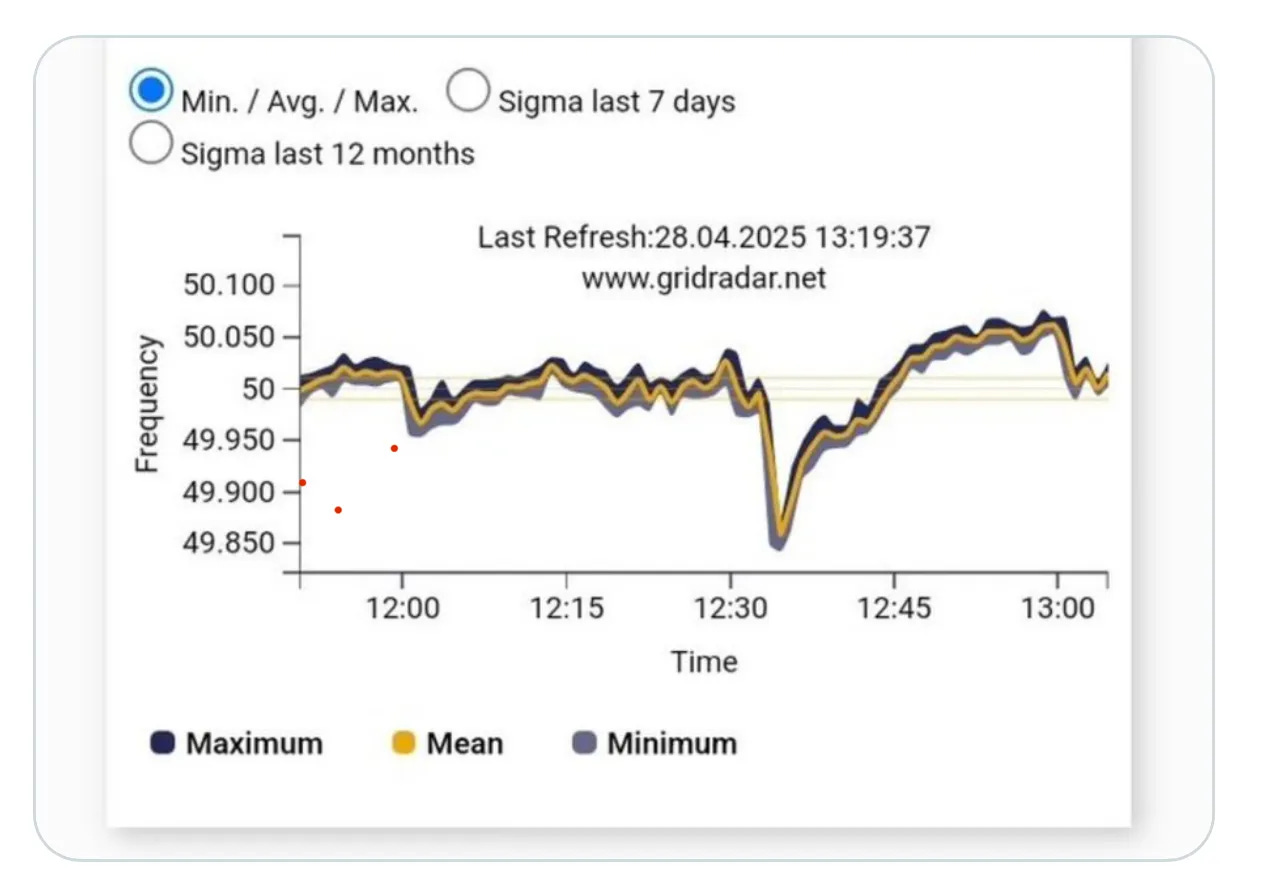

Aurora Energy analysts said the frequency of the grid dropped from the nominal 50Hz to 49.85Hz, triggering automatic emergency protocols.

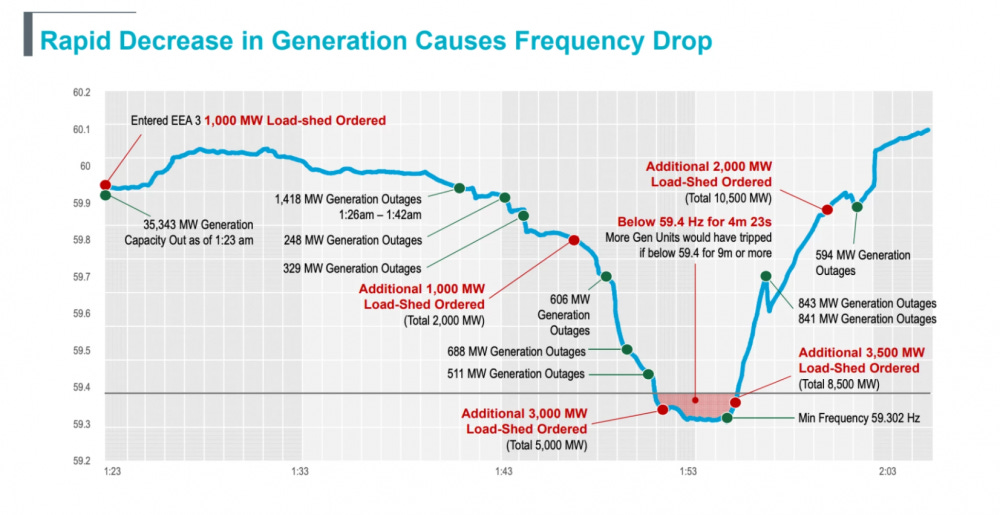

The drop in Spain’s grid frequency caused generators across the country to shut down automatically once it crossed their pre-set operating thresholds. The outage then spread to Portugal, which was importing electricity from Spain at the time. To anyone in the power systems world, the screenshot above is worth a million words.

I want you to focus on the dip in the middle. Yes, that is a drop of just 0.15Hz, or 0.3% of Spain’s baseline 50Hz operating frequency. However, this brief blip is among a grid operator’s worst nightmares. To understand why, we need to first understand the history and importance of grid operating frequencies.

The origins of grid frequency

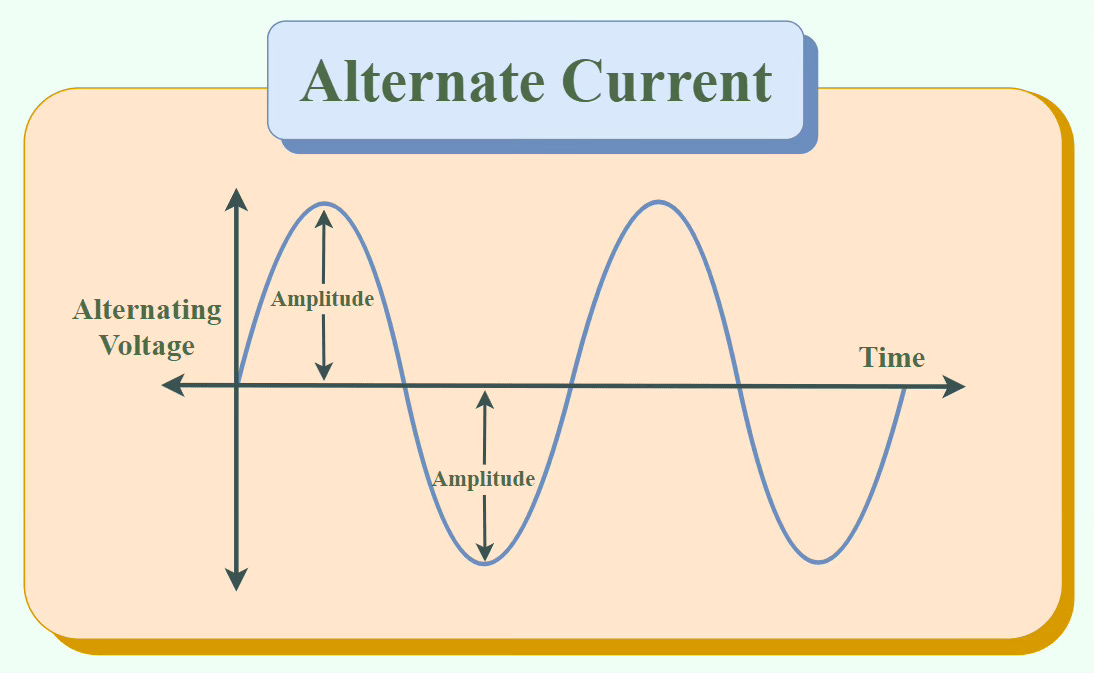

In the late 1800s, alternating current (AC) beat out direct current (DC) as the preferred method of transmitting electricity. This is because transformers can easily step AC voltage up or down. Stepping the voltage up for transmission lowers the current needed to carry the same power, which cuts resistive losses and makes long-distance transfer efficient. As the name suggests, alternating current periodically reverses direction at a fixed rate. This rate, or frequency, is measured in hertz (Hz): the number of complete cycles per second.
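To make the transformer argument concrete, here is a minimal Python sketch of resistive line losses. It assumes a simplified single-phase line where current is power divided by voltage and the loss is I²R. It ignores power factor, three-phase effects, and voltage drop along the line. The 10 MW load and 5 Ω line resistance are hypothetical numbers chosen for illustration.

```python
def line_loss_fraction(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Fraction of transmitted power lost as heat in a simplified single-phase line."""
    current_a = power_w / voltage_v           # I = P / V (unity power factor assumed)
    loss_w = current_a ** 2 * resistance_ohm  # resistive loss: I^2 * R
    return loss_w / power_w


# Hypothetical example: deliver 10 MW through a line with 5 ohms of total resistance.
for kv in (11, 110, 400):
    fraction = line_loss_fraction(10e6, kv * 1e3, 5.0)
    print(f"{kv:>3} kV: {fraction:.2%} of the power is lost")
```

The lost fraction works out to P·R/V². Every tenfold increase in voltage therefore cuts it by a factor of 100, which is why being able to step voltage up for transmission mattered so much.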

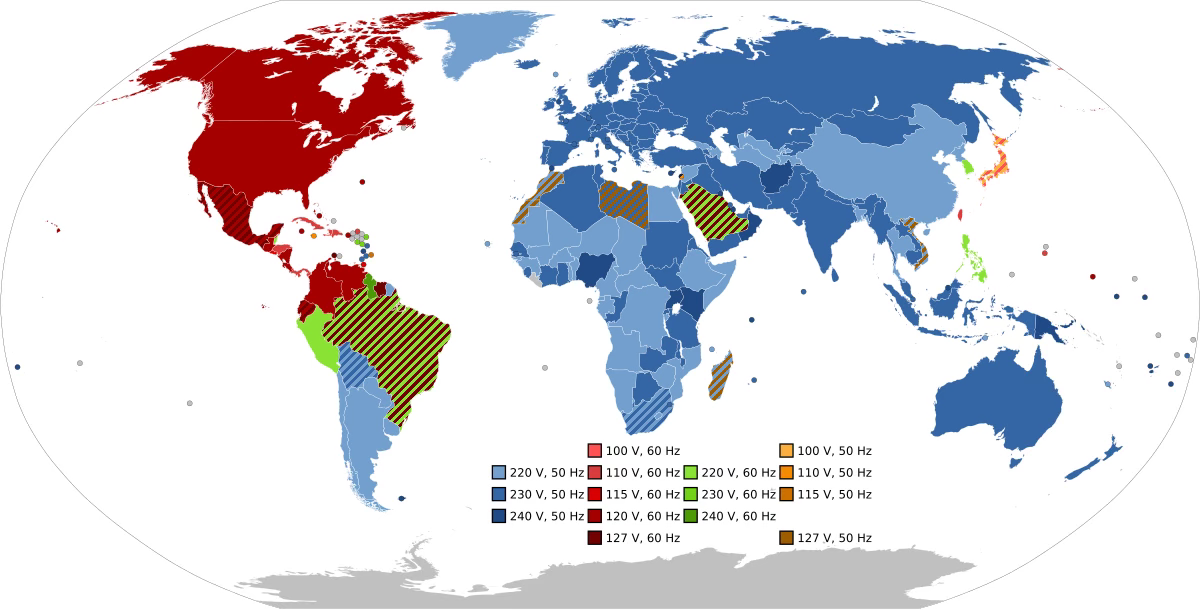

Initially, network operators used several frequencies within a single grid, usually chosen for the design convenience of individual steam engines, water turbines, and electrical generators. However, as electricity systems grew, it became clear that each network needed one common frequency. A shared frequency let electrical appliances be designed to perform well on it, and it avoided the synchronisation problems that arise when generation and consumption endpoints such as households and factories run at mismatched frequencies. This frequency had to be high enough to avoid flickering lights but not so high that it would damage motors.

Owing to various regional and historical idiosyncrasies, countries had settled on either 50Hz or 60Hz as their grid frequency by the mid-1950s. Over a century of global grid operations and research tells us that there is no single optimal operating frequency, as both 50Hz and 60Hz have their own advantages and challenges. What is non-negotiable, however, is that a grid must hold its frequency steady at all times.

Why we need a steady grid frequency

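Modern electrical grids are designed around one core principle: balance. At every instant, electricity generation must exactly match demand. A grid’s frequency is its real-time status signal, directly indicating whether the power being generated by power plants matches the power being used by homes, businesses, and industries. This works because the large generators in conventional power plants spin in lockstep with the grid frequency. When demand exceeds supply, the shortfall is drawn from the kinetic energy of their rotating masses, which slow down and pull the frequency down with them. When supply exceeds demand, they speed up.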

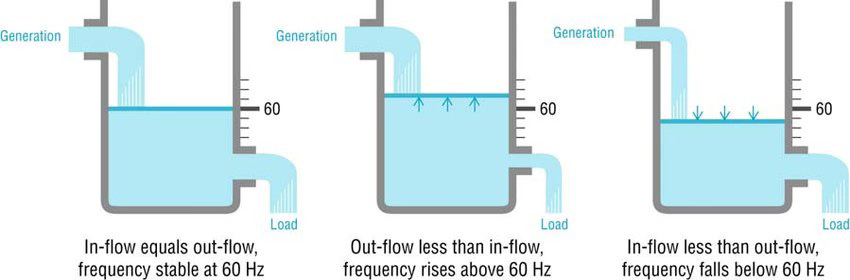

Think of an electrical power system as a water tank with an inlet and outlet:

Water flowing in (generation): This represents the electricity being produced by power plants (coal, gas, solar, etc.).

Water flowing out (consumption): This represents the electricity being consumed by everyone.

Water level (frequency): The water level in the tank represents the grid's operating frequency. Here, the baseline frequency is set at 60Hz.

With this, three possible scenarios exist:

Power generated = power used: The water flowing in perfectly matches the water flowing out. The water level stays steady, and the grid frequency is stable (at 60Hz).

Power generated > power used: More water flows in than flows out. The water level rises and the grid frequency goes up (above 60Hz).

Power generated < power used: Less water flows in than flows out. The water level drops and the grid frequency goes down (below 60Hz).
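The tank analogy maps onto what engineers call the swing equation, which links a power imbalance to how fast the frequency changes. Below is a rough Python sketch of the three scenarios. It assumes a hypothetical 60Hz system with a lumped inertia constant H of 5 s on a 50,000 MW base and a 40,000 MW load. It also assumes loads whose consumption falls by 1% for every 1% drop in frequency. The sketch deliberately leaves out governors and reserves, so it only shows the direction of each deviation, not how a real grid would recover.

```python
from dataclasses import dataclass


@dataclass
class Grid:
    """Lumped model of a whole grid; every default value is a hypothetical assumption."""
    f_nominal: float = 60.0     # Hz, matching the 60Hz tank example
    inertia_h: float = 5.0      # s, aggregate inertia constant
    base_mw: float = 50_000.0   # MW, rating that H is expressed on
    load_damping: float = 1.0   # % load change per % frequency change


def simulate(grid: Grid, gen_mw: float, load_mw: float,
             seconds: float = 10.0, dt: float = 0.01) -> float:
    """Frequency (Hz) after `seconds`, via forward-Euler integration of the swing equation."""
    f = grid.f_nominal
    for _ in range(round(seconds / dt)):
        # Motors and other frequency-sensitive loads draw less power as f falls.
        load_now = load_mw * (1 + grid.load_damping * (f - grid.f_nominal) / grid.f_nominal)
        imbalance_mw = gen_mw - load_now  # "inflow minus outflow" in the tank analogy
        # Swing equation: df/dt = f0 * imbalance / (2 * H * base)
        f += grid.f_nominal * imbalance_mw / (2 * grid.inertia_h * grid.base_mw) * dt
    return f


grid = Grid()
for label, gen_mw in [("balanced", 40_000), ("surplus", 40_200), ("deficit", 39_800)]:
    print(f"{label:>8}: {simulate(grid, gen_mw, 40_000):.3f} Hz after 10 s")
```

Without governors, the only thing stopping the drift in this sketch is the load damping term. That term is the equivalent of a tank whose outflow rises as the water level rises.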

Maintaining a steady grid frequency is key to ensuring the reliable operation of modern power systems and the proper functioning of connected devices, from industrial motors to household appliances. Current European engineering guidelines recommend a normal operating range of ±0.2Hz around 50Hz, with several sub-thresholds defined as trigger points for different types of generators to switch on or off.
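Here is a small Python sketch of how such a band and its sub-thresholds might be checked in software. Only the ±0.2Hz band comes from the text above. The under-frequency trigger points and their actions are hypothetical placeholders, since real values are set by each grid code and operator.

```python
NOMINAL_HZ = 50.0
NORMAL_BAND_HZ = 0.2  # ±0.2Hz normal operating range quoted in the text

# Hypothetical under-frequency trigger points (Hz, action), for illustration only.
UNDER_FREQUENCY_TRIGGERS = [
    (49.7, "activate fast frequency reserves"),
    (49.5, "start standby generation"),
    (49.0, "begin automatic load shedding"),
]


def assess(frequency_hz: float) -> tuple[str, list[str]]:
    """Classify a frequency reading and list every hypothetical trigger it has crossed."""
    deviation = frequency_hz - NOMINAL_HZ
    if abs(deviation) <= NORMAL_BAND_HZ:
        status = "normal"
    elif deviation < 0:
        status = "under-frequency"
    else:
        status = "over-frequency"
    # Thresholds are cumulative: a deeper dip trips every stage above it too.
    actions = [action for threshold, action in UNDER_FREQUENCY_TRIGGERS
               if frequency_hz <= threshold]
    return status, actions


for reading in (50.05, 49.6, 48.9):
    print(reading, assess(reading))
```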

For context, the 2021 Texas outage was first triggered by a 0.1Hz drop that set off a chain of events leading to a staggering 0.7Hz drop, ~1.2% below the American 60Hz standard. This is about as close to a worst-case scenario as it gets, and the Texas grid was literally 4 minutes away from a total collapse.
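The percentages in this article are simple ratios of the frequency drop to the nominal frequency. Below is a quick Python check of both figures quoted here: a 0.15Hz dip on Spain’s 50Hz grid, and a roughly 0.7Hz dip on the 60Hz Texas grid, taken here as a fall to 59.3Hz.

```python
def deviation_percent(nominal_hz: float, measured_hz: float) -> float:
    """Frequency drop as a percentage of the nominal grid frequency."""
    return (nominal_hz - measured_hz) / nominal_hz * 100


spain = deviation_percent(50.0, 49.85)  # 0.15Hz dip on a 50Hz grid
texas = deviation_percent(60.0, 59.3)   # ~0.7Hz dip on a 60Hz grid
print(f"Spain: {spain:.2f}%  Texas: {texas:.2f}%")  # → Spain: 0.30%  Texas: 1.17%
```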

The impact of renewable energy on grid frequency stability

The increasing share of solar and wind generation poses two distinct challenges for system operators attempting to maintain frequency stability:

Solar and wind generation depend on weather conditions, leading to rapid changes in power output. These fluctuations cause supply-demand imbalances, directly affecting real-time grid frequency.

Unlike conventional power plants, which provide stabilising inertia through large rotating turbines, solar and wind farms connect to the grid via power-electronic inverters and contribute little or no inertia. With less inertia, the same supply-demand imbalance makes the frequency change faster, so the grid becomes more sensitive to deviations.
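The inertia effect can be quantified with the initial rate of change of frequency (RoCoF) right after a sudden loss of generation: RoCoF = f₀·ΔP / (2·H·S). Here H is the system’s aggregate inertia constant in seconds and S its rated capacity. The Python sketch below compares a high-inertia and a low-inertia version of the same hypothetical 50Hz, 50,000 MVA system losing a 1,000 MW unit. All numbers are illustrative assumptions. The calculation only describes the first instant, before any frequency response kicks in.

```python
def initial_rocof(f_nominal_hz: float, lost_mw: float, inertia_h_s: float, system_mva: float) -> float:
    """Initial rate of change of frequency (Hz/s) just after a sudden loss of generation."""
    return f_nominal_hz * lost_mw / (2 * inertia_h_s * system_mva)


# Hypothetical 50Hz system with 50,000 MVA of synchronised capacity losing a 1,000 MW unit.
for label, h in [("high inertia, H = 6 s", 6.0), ("low inertia,  H = 2 s", 2.0)]:
    rocof = initial_rocof(50.0, 1_000, h, 50_000)
    # Time to fall 0.2Hz if the initial slope continued with no response at all.
    print(f"{label}: {rocof:.3f} Hz/s, {0.2 / rocof:.1f} s to drop 0.2Hz")
```

Cutting inertia to a third triples the initial RoCoF. If nothing responded, the frequency would reach the edge of the ±0.2Hz band three times sooner.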

To be clear, neither issue is a drawback of solar or wind generation itself, and both are solvable with existing technology. The US National Renewable Energy Laboratory (NREL), widely regarded as one of the world’s leading institutions for fundamental energy research, published an excellent report in 2020 that offers valuable insights on this very issue:

Replacing conventional generators with inverter-based resources, including wind, solar, and certain types of energy storage has two counterbalancing effects. First, these resources decrease the amount of inertia available. But second, these resources can respond much faster than conventional resources, reducing the amount of inertia actually needed—and thus addressing the first impact.

The combination of inertia and mechanical frequency response can be replaced […] with electronic-based frequency response from inverter-based resources and fast response from loads, while maintaining system reliability. Given these solutions, reduced inertia is not an inherent barrier to increased deployment of wind and solar energy. Our reliance on inertia to date results largely from the legacy use of synchronous generators.

Although growth in inverter-based resources will reduce the amount of grid inertia, there are multiple solutions for maintaining or improving system reliability—so declines in inertia do not pose significant technical or economic barriers to significant growth in wind, solar, and storage […]
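NREL’s point that speed can substitute for inertia can be illustrated with a toy simulation. The Python sketch below assumes a hypothetical 50Hz, 50,000 MVA system that suddenly loses 1,000 MW. Frequency response is modelled as a simple droop of 5,000 MW per Hz that ramps in through a first-order lag. The sketch compares two cases: a high-inertia system (H = 6 s) with slow, mechanical-style response (5 s time constant), and a low-inertia system (H = 2 s) with fast, inverter-style response (0.5 s). This is a simplified illustration under those assumptions, not the model used in the NREL report.

```python
def frequency_nadir(inertia_h: float, response_tau_s: float, *, f0: float = 50.0,
                    system_mva: float = 50_000.0, lost_mw: float = 1_000.0,
                    response_mw_per_hz: float = 5_000.0, seconds: float = 30.0,
                    dt: float = 0.001) -> float:
    """Lowest frequency deviation (Hz) after a sudden loss of generation.

    Integrates the aggregate swing equation step by step, with frequency response
    modelled as a droop (MW per Hz) that ramps in through a first-order lag.
    All parameters are hypothetical.
    """
    a = f0 / (2 * inertia_h * system_mva)  # Hz per MW-second of imbalance
    df = 0.0        # deviation from nominal frequency, Hz
    response = 0.0  # extra power delivered by frequency response, MW
    nadir = 0.0
    for _ in range(round(seconds / dt)):
        target = -response_mw_per_hz * df  # droop: more power the lower f goes
        response += (target - response) / response_tau_s * dt
        df += a * (response - lost_mw) * dt
        nadir = min(nadir, df)
    return nadir


slow = frequency_nadir(inertia_h=6.0, response_tau_s=5.0)  # high inertia, slow response
fast = frequency_nadir(inertia_h=2.0, response_tau_s=0.5)  # low inertia, fast response
print(f"high inertia + slow response: {slow:.3f} Hz")
print(f"low inertia + fast response:  {fast:.3f} Hz")
```

In this toy model, the low-inertia, fast-response case bottoms out at roughly -0.23Hz. The high-inertia, slow-response case reaches roughly -0.34Hz, even though the low-inertia case starts falling three times faster. Real systems combine many response types with different speeds, so treat these numbers as qualitative.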

There you go. We have known about the impact of solar and wind generation on grid frequency for decades, and modern power electronics respond so quickly that entire electricity markets exist to maintain grid frequency on a 0.1s timescale. In grids with a high share of renewable generation, like Spain’s, outages today are far more likely to be caused by inadequate frequency response and energy storage than by the way the energy is generated.

Like many fellow energy geeks, I want to read the official engineering reports and studies on the Spanish outage before making any assertions. Some sensationalist headlines have somehow already blamed it solely on renewable energy generation. Ultimately, understanding what went wrong in Spain will shape how we build smarter, more resilient energy systems worldwide.

If you’d like to learn more about how operators restart power grids after outages, check out this video by the wonderful Real Engineering: